Get ready for a revolution in human-computer interaction. OpenAI just dropped GPT-4o, its new flagship generative AI model.

This isn’t your average chatbot upgrade. It’s the “omni” model, which means it can handle text, speech, and video, all at once.

Imagine you’re talking to a machine that not only understands your words but can also see what you’re showing and hear the tone of your voice.

This is what GPT-4o brings to the table. With these new features, GPT-4o can interact with us more naturally, narrowing the communication gap between humans and machines.

This rollout, unlike previous releases, will be “iterative,” gradually integrating GPT-4o’s capabilities into OpenAI’s consumer and developer products over the coming weeks.

According to OpenAI CTO Mira Murati, GPT-4o retains the intelligence level of its predecessor, GPT-4, but surpasses it in its ability to handle information across different formats.

OpenAI’s previous flagship model, GPT-4 Turbo, was trained on text and images. It could analyze both to perform tasks like extracting text from images or describing their content. GPT-4o adds speech recognition and processing to the mix.

This advancement unlocks a range of possibilities.

One key improvement is the user experience of ChatGPT, OpenAI’s AI-powered chatbot. While ChatGPT previously offered a “voice mode” that used text-to-speech for the chatbot’s responses, GPT-4o elevates this interaction to a more assistant-like experience.

Imagine this: you can ask the GPT-4o-powered ChatGPT a question and even interrupt it mid-answer. OpenAI says the model responds in “real time” and can pick up on nuances in your voice, generating responses in a range of emotive styles.

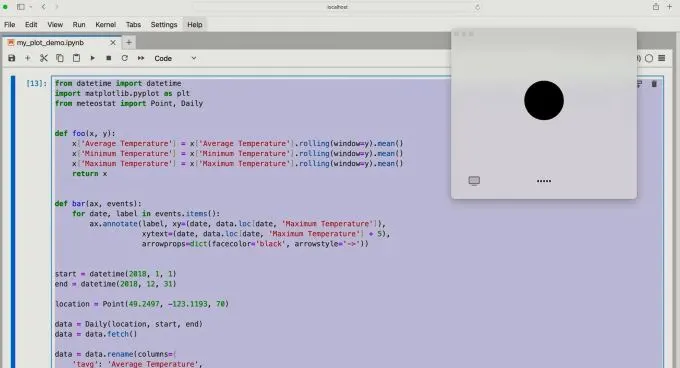

Beyond voice, GPT-4o upgrades ChatGPT’s visual comprehension. Presented with a photo or even a screenshot, ChatGPT can now answer related questions on the fly. This could range from explaining complex software code to identifying clothing brands in an image.

Murati emphasizes that these features will continue to evolve. Currently, GPT-4o can translate text from a photographed menu into another language. The future holds the possibility of ChatGPT “watching” a live sports game and explaining the rules to you in real time.

> As these models become increasingly complex, we want the interaction experience to feel more natural and effortless. We want users to focus on collaborating with ChatGPT, not navigating a user interface. For years, we’ve prioritized enhancing the intelligence of these models. GPT-4o represents a major leap forward in user-friendliness.
>
> *Mira Murati, OpenAI CTO*

OpenAI also says GPT-4o is more multilingual, with improved performance in around 50 languages. For developers, the GPT-4o API is a significant upgrade over GPT-4 Turbo: it is twice as fast, half the price, and has higher rate limits.

However, citing potential misuse concerns, OpenAI plans to offer GPT-4o’s new audio capabilities in the API only to “a small group of trusted partners” at first, in the coming weeks.

GPT-4o is now available in the free tier of ChatGPT, and subscribers to OpenAI’s premium ChatGPT Plus and Team plans get “5x higher” message limits. When users reach their limit, ChatGPT automatically switches to GPT-3.5, an older, less capable model.

OpenAI is also rolling out a series of additional features alongside GPT-4o. These include:

- A refreshed ChatGPT web UI with a more conversational interface and message layout.

- A desktop version of ChatGPT for macOS that lets users interact with the AI via keyboard shortcuts or discuss screenshots (ChatGPT Plus users get early access).

- Free access to the GPT Store, OpenAI’s library of third-party chatbots built on its AI models, along with the tools for creating them.

- Previously paywalled features for free ChatGPT users, such as memory (which lets ChatGPT remember preferences for future interactions), file and photo uploads, and web search.

OpenAI’s GPT-4o marks a significant step toward a future where human-computer interaction feels more natural and intuitive. This multimodal AI promises to change how we communicate and collaborate with machines.