Back in August, Meta introduced SeamlessM4T, a multimodal AI translation model supporting nearly 100 languages for text and 36 for speech. With an updated “v2” architecture, Meta is now working to make conversational translation more spontaneous and expressive, two qualities crucial for authentic cross-language communication.

The main addition to SeamlessM4T is “SeamlessExpressive,” a feature designed to carry the speaker’s vocal expression over into the translated speech. This includes pitch, volume, emotional tone (such as excitement, sadness, or whispering), speech rate, and pauses.

This could change both everyday conversations and content production, since it addresses the long-standing problem of translated speech sounding robotic. SeamlessExpressive supports English, French, German, Chinese, Spanish, and Italian, though the demo page currently lacks Italian and Chinese.

The second feature is “SeamlessStreaming,” which starts translating while the speaker is still talking, so listeners get the translated content sooner. There is still a latency of just under two seconds, but listeners no longer have to wait for the speaker to finish a sentence.

Meta acknowledges that sentence structure varies across languages, which makes this difficult. To handle it, the company developed an algorithm that examines partial audio input and decides whether there is enough context to start generating translated output or whether it should keep listening.
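To make the listen-or-translate decision concrete, here is a minimal Python sketch. It is not Meta's algorithm, whose details this article does not cover. It swaps in a much simpler fixed rule known as a wait-k policy: stay `k` audio chunks ahead of the output before emitting each translated token. The function name `wait_k_schedule` and its parameters are hypothetical and chosen only for illustration.

```python
from typing import List


def wait_k_schedule(num_source_chunks: int, num_target_tokens: int, k: int = 2) -> List[str]:
    """Return the READ/WRITE action sequence of a simple wait-k policy.

    Illustrative stand-in only: a real streaming translator decides from
    the audio content itself, not from a fixed lag of k chunks.
    """
    actions: List[str] = []
    read = 0     # audio chunks consumed so far
    written = 0  # translated tokens emitted so far
    while written < num_target_tokens:
        # Keep listening while audio remains and we are fewer than
        # k chunks ahead of what has already been translated.
        if read < num_source_chunks and read - written < k:
            actions.append("READ")
            read += 1
        else:
            # Enough context (or no audio left): emit one output token.
            actions.append("WRITE")
            written += 1
    return actions


if __name__ == "__main__":
    print(wait_k_schedule(num_source_chunks=3, num_target_tokens=3, k=2))
```

A smaller `k` lowers latency but gives the translator less context, which matters most for language pairs whose word order differs. A policy that adapts to the input, as the article describes, tries to avoid committing to a single fixed lag.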

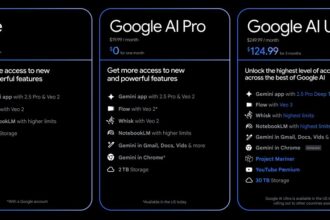

Meta’s advancements in the “Seamless Communication” suite outshine comparable mobile interpreter tools from industry giants like Amazon, Google, and Samsung. Meta has not disclosed when these features will become publicly available, but it is easy to imagine them built into the company’s smart glasses, where they would be even more practical in daily life.