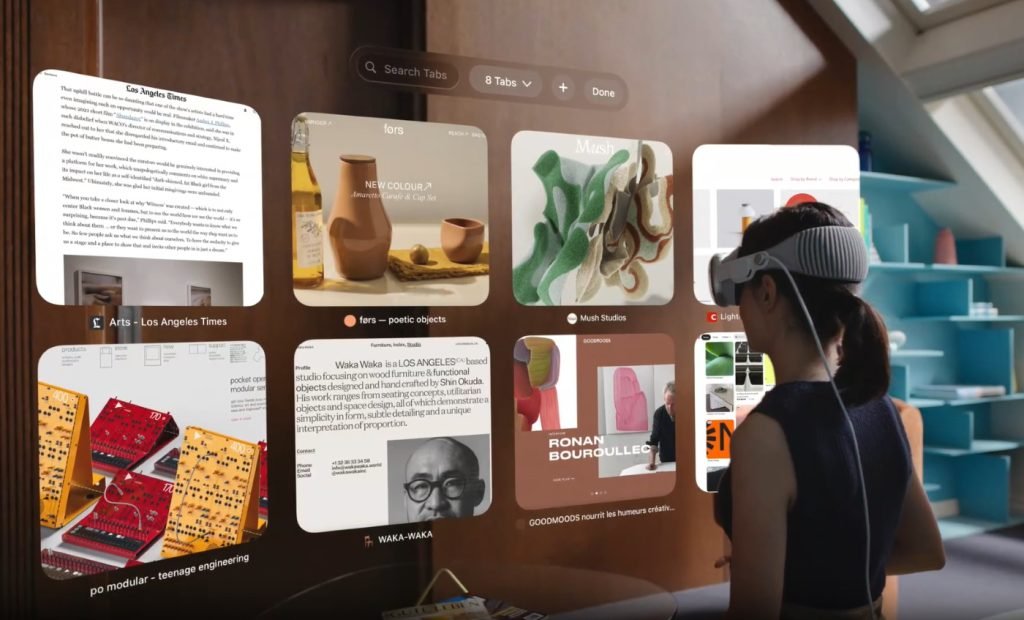

What is the best way to experience spatial computing on the Apple Vision Pro? The answer is “through its apps.” But there are no apps yet, which leaves a void that only developers can fill. Yet developers still have plenty of questions about what it means to build apps for Apple’s newest computing platform.

Apple’s Vision Pro is still months away from its consumer release. Although a few people have tried it, nobody has yet gained a “true understanding” of it, meaning a sense of how it feels to play, communicate, work, and explore in the headset. That understanding will remain elusive until someone builds an app to use. And that is only the beginning of the problem: no one yet has a solid idea of how to build applications for the new visionOS platform, or of what computing inside a Vision Pro will look like.

A recent Apple Developer blog post titled “Q&A: Spatial design for visionOS” draws on a Q&A session that Apple’s design team held with developers at WWDC23 in June. It answers many common questions about building apps for the Vision Pro, and some of the answers are surprising.

What is the Apple Vision Pro?

The Apple Vision Pro is a mixed-reality headset that supports both a full pass-through view, which places virtual AR elements in the user’s real surroundings, and complete immersion in a virtual world. The fully immersive mode is quite impressive, especially in how it gives the user a virtual body.

“We generally recommend not placing people into a fully immersive experience right away. It’s better to make sure they’re oriented in your app before transporting them somewhere else,” says Apple in the blog post. In other words, Apple is not ruling out fully immersive apps; it is advising developers to ease users into full immersion rather than dropping them into it the moment an app opens.

Apple also recommends that developers create a ground plane that connects their app to the actual floor. Most of the time, people will use the Vision Pro in pass-through (mixed reality) mode, and grounding virtual content on the real floor preserves their connection to their surroundings, which keeps them from feeling disoriented in the app.

3D programming

On the Vision Pro, you are not computing on a 2D plane but in 3D space. This changes how people work with display elements, and it is new territory for most developers. Apple’s designers address this with gaze and gesture control in visionOS: users look at an element to target it and use hand gestures to act on it. App development on visionOS may therefore be more complex, and developers should think about what happens when a user looks at an element, in terms of gaze and direction, rather than how a mouse pointer locates it on a screen.

The Use of Sounds

The Apple Vision Pro uses spatial audio to create a surround soundstage that immerses the user in the environment. Developers who don’t usually think much about sound should consider adding audio cues to their apps: sounds help users confirm and recognize their actions in the environment, connecting them more fully to the spatial computing experience.